I spent the last few weeks combining my Obsidian graph with GPT vector embeddings, creating a fully personal, fully local, fully operable Google for my personal notes.

And it got me thinking.

What *is* search?

Why do we search for things in the first place?

Is search a shortcut to truth?

Is search a pathway to satiate our bottomless desire as a species for information?

Perhaps it’s a way to feel connected, to come to terms with unanswered questions, to commune with the Oracle of time and space.

Whatever search is, we all do it. A lot.

Google has been visited 62.19 billion times this year. (yes, I googled that, so 62.19 billion + 1)1

People have questions, and the Internet has answers. Or does it?

We Ourselves are Producers of Data

We all are active producers of data, even when we consume data.

There is no such thing as an independent observer. By interacting with the web, you become the web.

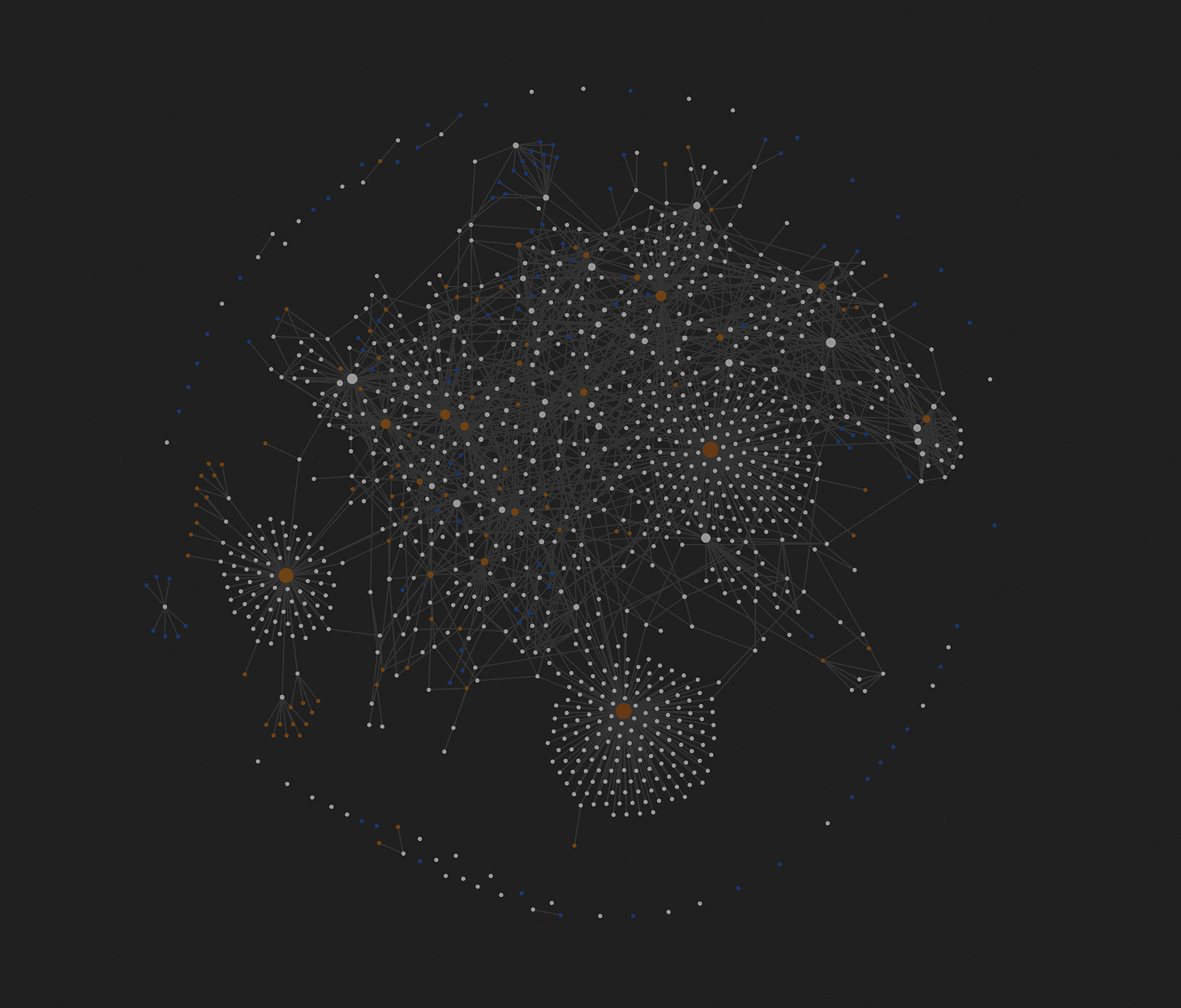

The picture above illustrates my personal web of information, my Zettelkasten.2

My Zettelkasten is a collection of my ideas, thoughts, concerns, opinions, preferences, revelations, conflicts, experiences, and many many more.

If you’ll notice, everything is connected to everything else. Sure, some nodes get visited infrequently like a rural town buried in the mountains, some nodes drift along doing their own thing like a digital nomad living out of her suitcase. But all of the nodes are purposeful and have some relationship with the overall graph.

Where Does Our Data Go?

For many, data is consumed and then lost to the flow of time in favor of the final experience. The wood shavings are scattered across the workshop floor, waiting to be swept into the proverbial trash can.

But data is flexible.

Data can be re-contextualized, reshaped at will. Data can, in a sense, revive itself from “death”.

Data can have a brand new life when it is merely examined from a different angle.

If we are willing to store our experiences on the web in storage systems we trust, that data does not need to be transient. It does not need to be subsumed by the environment it is a part of.

It can be assimilated.

And that’s where embeddings comes in.

Embeddings and Stuff

The main workflow of Google is to:

index data

store the data

return relevant data on query

With Embeddings3, we can do the same thing, at a fraction of the financial cost and a fraction of the data lock in.

Let’s break down each step.

The Right Stuff

Not all data is useful. In fact, most data is noise outside of the specific context in which it was made.

The first step to getting valuable data is to chunk.

Chunkin’

To chunk data, we are merely splitting it into useful fractions.

For this blog post, a good chunk candidate would be line breaks. For a video, it could be jump cuts.

Whatever it is, it must be atomic enough to be standalone, yet large enough to hold enough relevant information.

| /** | |

| * a chunking algorithm that splits a string into chunks of a maximum length as dictated by max tokens allowed by the API | |

| * Does not respect spaces, so it is not suitable for splitting text into sentences | |

| * @param document document to be chunked | |

| * @returns chunks of the document | |

| */ | |

| export function chunkDocument (document: string): string[] { | |

| const chunks: string[] = [] | |

| let chunksAboveTokenLimit = [] // [true, false, etc] want all to be false | |

| let numOfSubdivisions = 0 | |

| chunksAboveTokenLimit.push(checkLength(document)) | |

| while (chunksAboveTokenLimit.includes(true)) { | |

| numOfSubdivisions++ | |

| chunksAboveTokenLimit = [] | |

| let maxChunkLength = Math.floor(document.length / numOfSubdivisions) | |

| for (let i = 0; i < numOfSubdivisions; i++) { | |

| const chunk = document.slice(i * maxChunkLength, (i + 1) * maxChunkLength) | |

| chunksAboveTokenLimit.push(checkLength(chunk)) | |

| } | |

| } | |

| if(numOfSubdivisions > 1) { | |

| let maxChunkLength = Math.floor(document.length / numOfSubdivisions) | |

| for (let i = 0; i < numOfSubdivisions; i++) { | |

| const chunk = document.slice(i * maxChunkLength, (i + 1) * maxChunkLength) | |

| chunks.push(chunk) | |

| } | |

| } else { | |

| chunks.push(document) | |

| } | |

| return chunks | |

| } |

We then embed each chunk, turning text into a 2D Vector representation.

Store It in a U-Haul!

Next, we need a place to put all these chunks. A database seems like a reasonable option.

| export async function writeObsidianDocumentToPostgres(prismaClient: PrismaClient, obsidianDocument: { | |

| filename: string; | |

| chunks: string[][]; | |

| embeddingsResponse: { | |

| embeddings: number[][]; | |

| text: string[]; | |

| }; | |

| }) { | |

| return prismaClient.obsidian.create({ | |

| data: { | |

| doc: obsidianDocument, | |

| filename: obsidianDocument.filename, | |

| } | |

| }).then((result) => { | |

| return result; | |

| }) | |

| .catch((error) => { | |

| console.log(error); | |

| return error; | |

| }); | |

| } |

Query It Like Beckham

Finally, we use cosine similarity4 (a pretty cool function if I do say so myself) to query all this new data we created.

| /** | |

| * Run cosine similarity search on all documents in the database against a list of queries, and return the top {numResults} results | |

| * @param documentEmbeddings number[][] - embeddings of source document | |

| * @param queryEmbeddings number[][] - embeddings of queries (queries are a list) | |

| * @param numResults number - number of results to be returned | |

| * @returns SearchResult[][] - list of results | |

| */ | |

| export function search(documentEmbeddings: number[][], queryEmbeddings: number[][], numResults: number = 3): SearchResults[][] { | |

| const results: SearchResults[][] = []; | |

| queryEmbeddings.map((queryEmbedding) => { | |

| const similarityRankings = documentEmbeddings.map((vector, i) => { | |

| const similarity = cosineSimilarity(vector, queryEmbedding); | |

| return { | |

| index: i, | |

| similarity, | |

| vector | |

| } | |

| }) | |

| .sort((a, b) => b.similarity - a.similarity); | |

| const slicedRankings = similarityRankings.slice(0, numResults); | |

| results.push(slicedRankings) | |

| }); | |

| return results; | |

| } |

Wrapping Up

The search of tomorrow involves data captured today. Pay attention to the trail your data leaves behind, and ask yourself, could this have another life?

ars longa, vita brevis

Bram

https://www.google.com/search?q=total+google+searches+in+2021&oq=total+google+searches+in+2021&aqs=chrome..69i57j0i22i30l3j0i390l4j69i64.5548j1j7&sourceid=chrome&ie=UTF-8

https://www.bramadams.dev/slip-box/Publish+Home+Page

https://beta.openai.com/docs/guides/embeddings/what-are-embeddings

https://en.wikipedia.org/wiki/Cosine_similarity